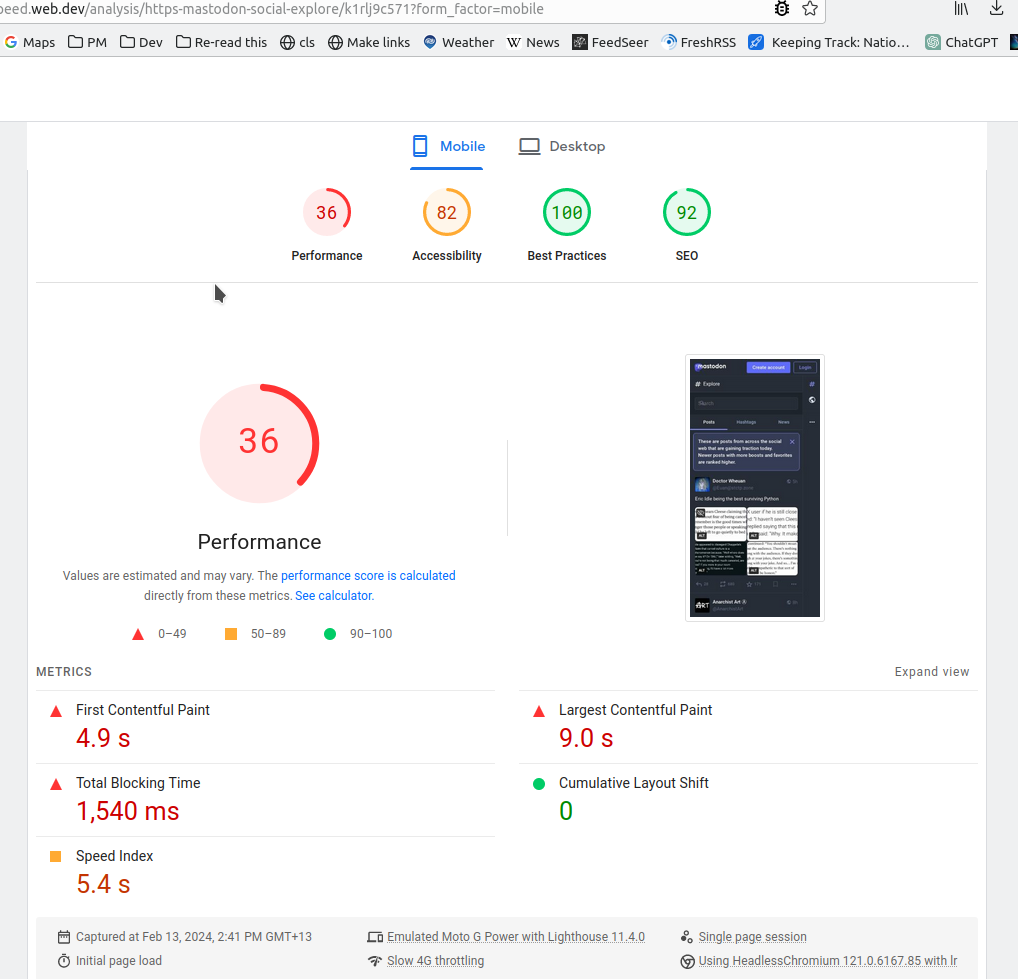

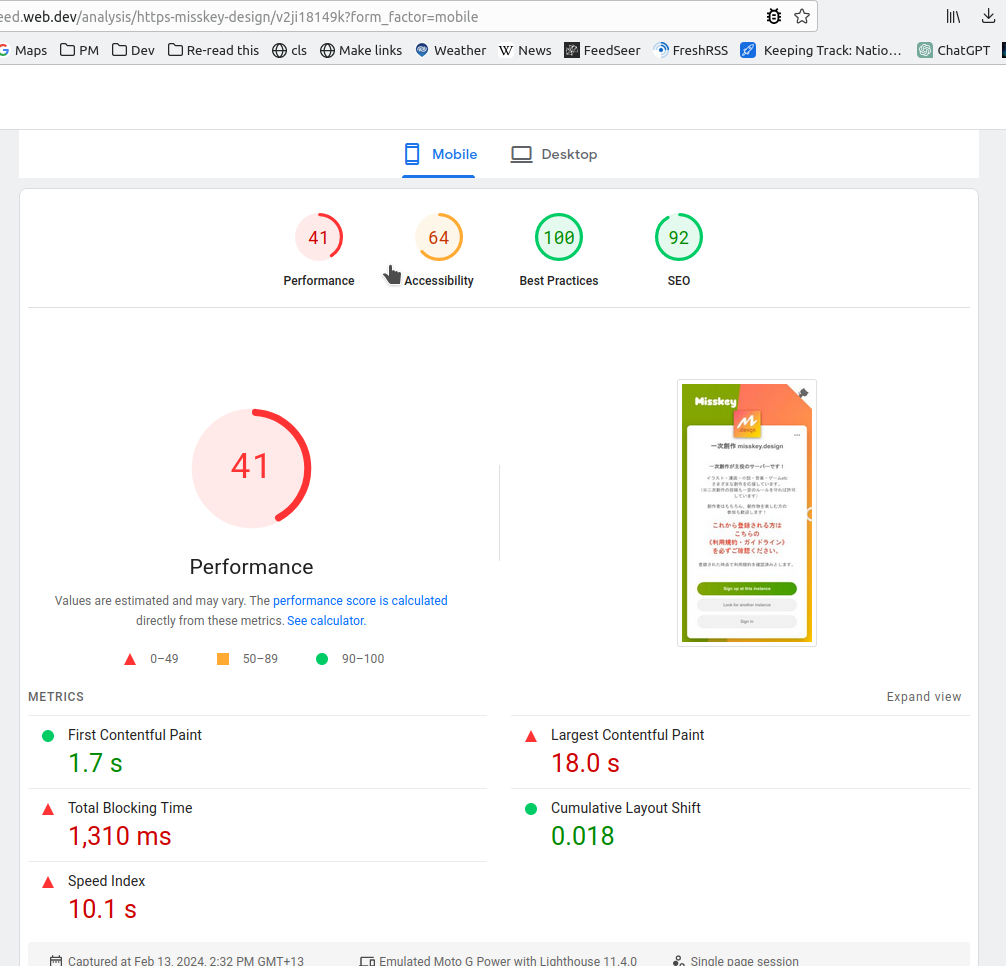

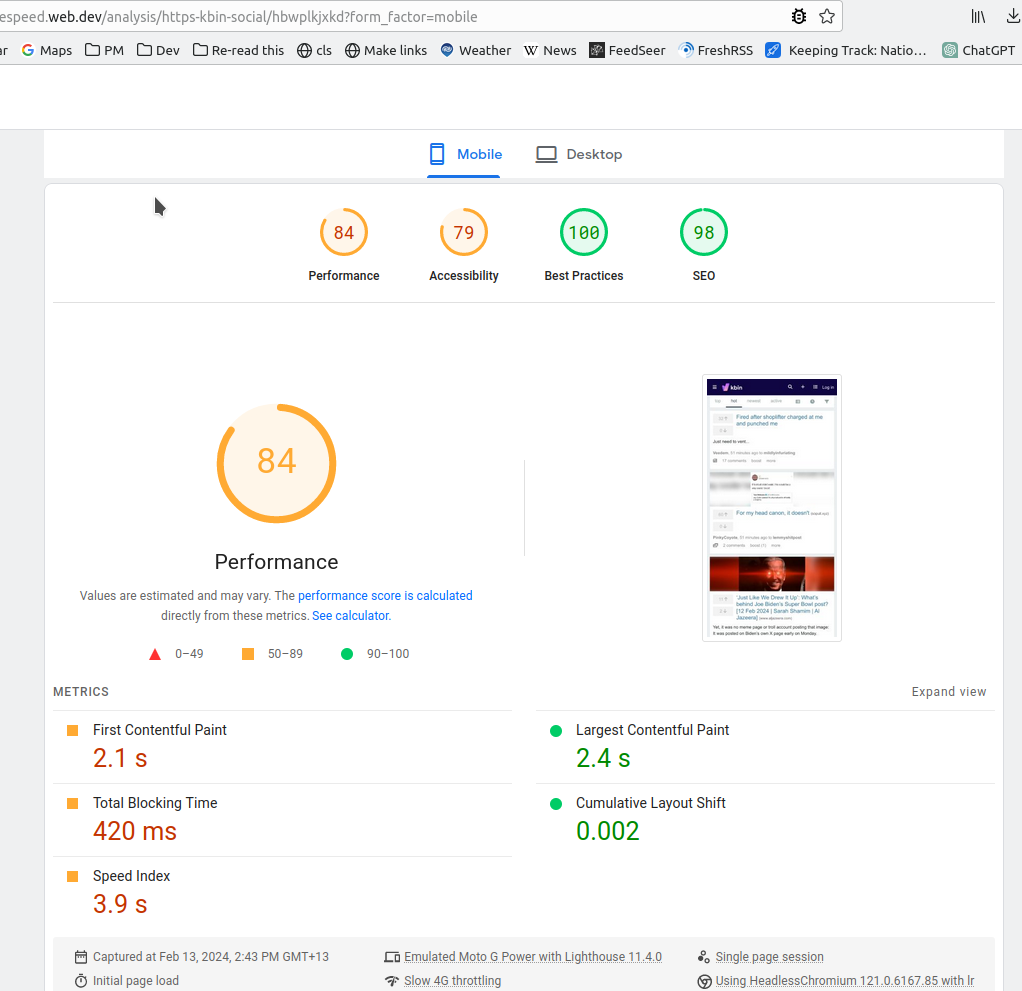

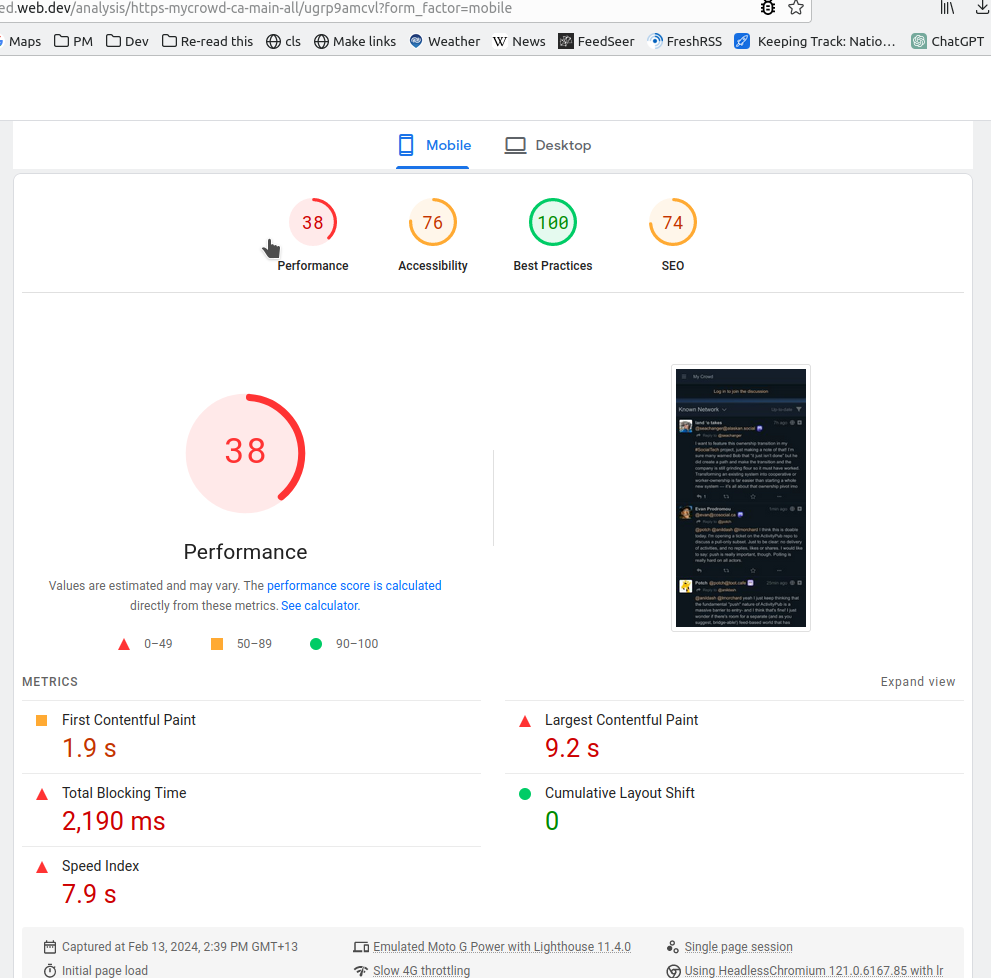

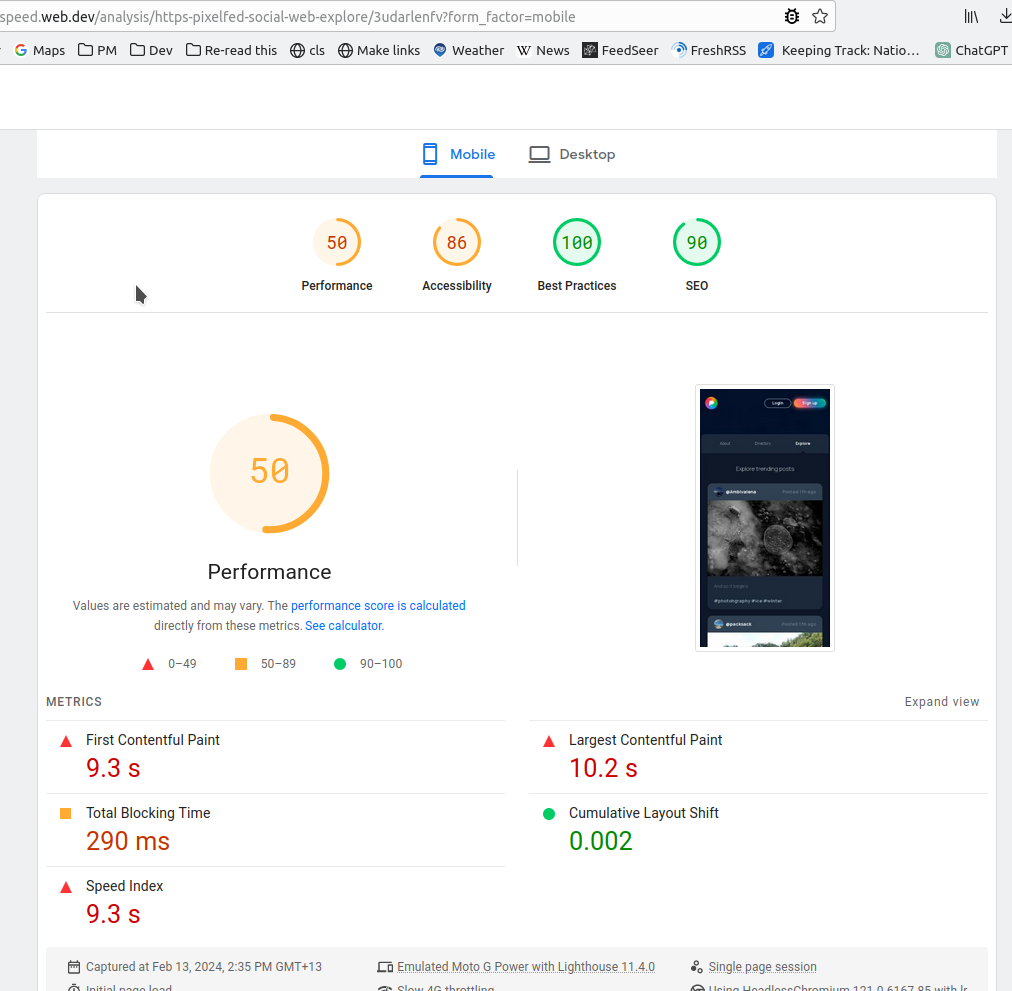

Google provides a tool called PageSpeed Insights, which scores a website on a set of metrics to assess how well it is put together and how fast it loads. There are a lot of technical details, but in general green scores are good, orange is not great, and red is bad.
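To make the colour coding concrete, here is a minimal sketch of how a score maps to a colour. It assumes the bands Lighthouse documents for its category scores, as I understand them (0 to 49 red, 50 to 89 orange, 90 to 100 green), and that the score arrives as a fraction between 0.0 and 1.0. The function name `score_band` is made up for illustration and is not part of any Google tool.

```python
def score_band(score: float) -> str:
    """Map a category score (0.0 to 1.0) to its colour band.

    Assumed bands: 0-49 red, 50-89 orange, 90-100 green.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    points = round(score * 100)  # convert to the 0-100 scale shown in reports
    if points >= 90:
        return "green"
    if points >= 50:
        return "orange"
    return "red"


if __name__ == "__main__":
    for sample in (0.97, 0.72, 0.31):
        print(sample, score_band(sample))
```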

I tried to ensure the tests were similar for each platform by choosing a page that shows a list of posts, like https://mastodon.social/explore.

PieFed and kbin do very well. Pixelfed is pretty good, especially considering the image-heavy nature of the content.

The rest either haven’t prioritized performance or chose a software architecture that cannot be made to perform well on these metrics. It will be very interesting to see how that affects the cost of running large instances and the longevity of the platforms. Time will tell.

I think that while Google’s PageSpeed rankings are a good way of identifying areas of improvement, they’re very much geared toward Google’s use case, and not necessarily that of any given site being tested. The tests are always run on a single page, so CSS or JS files that serve styles or features used on other parts of the site are treated as undesirable. Google doesn’t actually care about the overall performance of your site as people use it, merely how it functions in the scope of search results.

What I mean by this is that Google wants its users to be able to do a search, click a result, recognize quickly if it isn’t what they want, and then back up and try another result. That’s why they don’t care about caching CSS that you’ll use on multiple pages, or why they prefer particular methods of loading web fonts, even if those cause the fonts to load later and produce a flash of text changing on every page visited.

Meanwhile, social networking sites are premised on the idea that you’ll stick around, scroll through a lot of posts and visit profiles and settings pages, and Google isn’t testing for that kind of thing. It’s very hard to optimize a site for both end-users and Google, and Google makes it harder than it needs to be. Using any embedded Google JavaScript will lower your score, because the tests prize long cache lengths and small, streamlined files that only contain code that will execute on that page, while Google’s own analytics, webfonts, charts, maps, etc. are all optimized for Google’s benefit.

Also, your test result screenshots are all for the mobile visitors of those sites, and many social media sites are primarily accessed via the web by large-screen users and via apps by mobile users. Google tests mobile browsers more synthetically than they do desktop by slowing down the network speed greatly and simulating a low-end phone, as they are addressing a worldwide audience, while any individual site’s users might be primarily in regions with faster cellular data rates.
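For anyone who wants to compare the two profiles side by side, here is a rough sketch that builds request URLs for the public PageSpeed Insights v5 API with the `strategy` parameter set to each profile. The helper name `psi_request_url` is hypothetical, the uppercase enum spellings are my reading of the API reference, and the sketch only constructs the URLs; it does not fetch anything or handle API keys and quotas.

```python
from urllib.parse import urlencode

# Public PageSpeed Insights v5 endpoint.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "MOBILE") -> str:
    """Build a PSI request URL for one page and one test profile.

    `strategy` picks the simulated device profile: "MOBILE" or "DESKTOP".
    """
    strategy = strategy.upper()
    if strategy not in ("MOBILE", "DESKTOP"):
        raise ValueError("strategy must be 'MOBILE' or 'DESKTOP'")
    query = urlencode(
        {"url": page_url, "strategy": strategy, "category": "PERFORMANCE"}
    )
    return f"{PSI_ENDPOINT}?{query}"


if __name__ == "__main__":
    page = "https://mastodon.social/explore"
    for profile in ("MOBILE", "DESKTOP"):
        print(psi_request_url(page, profile))
```

Fetching both URLs for the same page and comparing the performance scores would show how much of a poor result comes from the throttled mobile profile rather than from the site itself.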

I don’t mean to say that their tests don’t have value, just that you have to look specifically at what is being tested and ask yourself if choosing to make changes for Google’s artificial tests is a better use of your time than working on other parts of your site. (Also? Their accessibility scores can really give you a false sense of security if you aren’t doing dedicated testing!)

The bigger picture of how we ended up here

https://www.baldurbjarnason.com/2024/react-electron-llms-labour-arbitrage/

#react #electron #llm